AI bots will soon be deployed on dating apps to flirt with people, write messages on users' behalf and write their profiles for them.

But relying on artificial intelligence to foster budding relationships risks eroding what little human authenticity remains on dating platforms, experts have warned.

Match Group, which owns the world's largest portfolio of dating platforms, including Tinder and Hinge, has announced that it is increasing its investment in AI, with new products due this month. AI bots will be used to help users choose their most popular photographs, write messages to people and provide “efficient coaching for users in difficulty”.

But these “users in difficulty”, who may be lacking in social skills and start to rely on AI assistants to craft conversations for them, may struggle on real dates, when they cannot use their phone to help them converse. This could lead to anxiety and further withdrawal into the comfort of the digital space, a group of academics said. It could also erode the trust that users have in the authenticity of others on the app. Who is using AI, and who is a real flesh-and-blood human behind the screen?

Dr. Luke Brunning, a professor of applied ethics at the University of Leeds, has coordinated an open letter calling for regulatory protections against AI on dating apps. He believes that trying to solve the social problems caused by technology with even more technology will make things worse, and that automated profile enhancement further entrenches a dating app culture in which people feel they must constantly outperform others to win.

“Many of these companies have correctly identified these social problems,” he said. “But they are reaching for technology as a way to solve them, rather than trying to do things that really defuse competitiveness, [like] making it easier for people to be vulnerable, easier for people to be imperfect, more accepting of each other as ordinary people who are not all over 6 feet [tall] with a fantastic, interesting career, a well-written biography and a constant sense of witty banter. Most of us are just not like that all the time.”

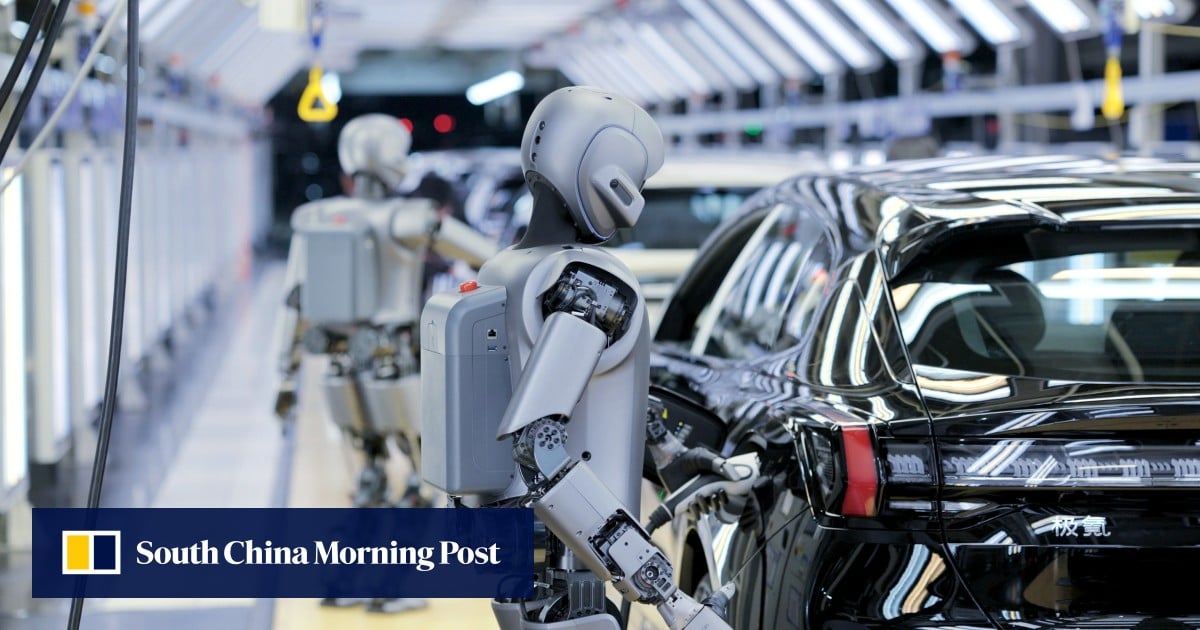

He is one of dozens of academics from across the United Kingdom, as well as the United States, Canada and Europe, who have warned that the hasty adoption of generative AI “can degrade an already precarious online environment”. AI on dating platforms risks multiple harms, they say, including worsening the loneliness and mental health crises among young people, exacerbating biases and inequalities, and further eroding people's real-life social skills. They believe that the explosion of AI features on dating apps must be regulated quickly.

In the United Kingdom alone, 4.9 million people use dating apps, with at least 60.5 million users in the United States. About three-quarters of dating app users are aged 18 to 34.

Many singles say it has never been so difficult to find a romantic relationship. However, the letter warns that AI on dating apps risks degrading the landscape further: facilitating manipulation and deception, reinforcing algorithmic biases around race and disability, and homogenizing profiles and conversations even more than they are currently.

But supporters of AI on dating apps say that assistants and “dating wingmen”, as they are known, could help reduce dating app fatigue, burnout and the admin of trying to set up dates. Last year, product manager Aleksandr Zhadan programmed ChatGPT to swipe and chat with more than 5,000 women on his behalf on Tinder. Eventually, he met the woman who is now his fiancée.

Brunning says he is not anti-app, but thinks that apps currently work for companies rather than for people. He is frustrated that the digital dating sector receives so little scrutiny compared with other areas of online life, such as social media.

“Regulators are waking up to the need to think about social media, and they are concerned about the social impact of social media, its effect on mental health. I am just surprised that dating apps have not been drawn into this conversation.

“In many ways, [dating apps] are very similar to social media,” he said. “In many other respects, they explicitly target our most intimate emotions, our strongest romantic desires. They should be attracting the attention of regulators.”

A spokesperson for Match Group said: “At Match Group, we are committed to using AI ethically and responsibly, placing user safety and wellbeing at the heart of our strategy … Our teams are dedicated to designing AI experiences that respect user trust and align with Match Group's mission to foster meaningful, inclusive and effective connections.” A spokesperson for Bumble said: “We see opportunities for AI to help improve safety, optimize user experiences and enable people to better represent their most authentic selves online, while remaining focused on ethical and responsible use. Our goal with AI is not to replace love or dating with technology; it is to make human connection better, more compatible and safer.”

Ofcom stressed that the Online Safety Act applies to harmful generative AI chatbots. An Ofcom spokesperson said: “When in force, the UK's Online Safety Act will put new duties on platforms to protect their users from illegal content and activity. We have been clear about how the act applies to GenAI, and we have set out what platforms can do to protect their users from the harm it poses by testing AI models for vulnerabilities.”