This is the most fundamental change to computing since the early days of the World Wide Web. Just as companies completely rebuilt their computer systems to accommodate the new commercial internet in the 1990s, they are now rebuilding from the bottom up — from tiny components to the way that computers are housed and powered — to accommodate artificial intelligence.

Big tech companies have constructed computer data centers all over the world for two decades. The centers have been packed with computers to handle the online traffic flooding into the companies’ internet services, including search engines, email applications and e-commerce sites.

But those facilities were lightweights compared with what’s coming. Back in 2006, Google opened its first data center in The Dalles, Ore., spending an estimated $600 million to complete the facility. In January, OpenAI and several partners announced a plan to spend roughly $100 billion on new data centers, beginning with a campus in Texas. They plan to eventually pump an additional $400 billion into this and other facilities across the United States.

The change in computing is reshaping not just technology but also finance, energy and communities. Private equity firms are plowing money into data center companies. Electricians are flocking to areas where the facilities are being erected. And in some places, locals are pushing back against the projects, worried that they will bring more harm than good.

For now, tech companies are asking for more computing power and more electricity than the world can provide. OpenAI hopes to raise hundreds of billions of dollars to construct computer chip factories in the Middle East. Google and Amazon recently struck deals to build and deploy a new generation of nuclear reactors. And they want to do it fast.

Google’s A.I. chips on a circuit board. The company needs thousands of these chips to build its chatbots and other A.I. technologies.

Christie Hemm Klok for The New York Times

The bigger-is-better mantra was challenged in December when a tiny Chinese company, DeepSeek, said it had built one of the world’s most powerful A.I. systems using far fewer computer chips than many experts thought possible. That raised questions about Silicon Valley’s frantic spending.

U.S. tech giants were unfazed. The wildly ambitious goal of many of these companies is to create artificial general intelligence, or A.G.I. — a machine that can do anything the human brain can do — and they still believe that having more computing power is essential to get there.

Amazon, Meta, Microsoft, and Google’s parent company, Alphabet, recently indicated that their capital spending — which is primarily used to build data centers — could top a combined $320 billion this year. That’s more than twice what they spent two years ago.

The New York Times visited five new data center campuses in California, Utah, Texas and Oklahoma and spoke with more than 50 executives, engineers, entrepreneurs and electricians to tell the story of the tech industry’s insatiable hunger for this new kind of computing.

“What was probably going to happen over the next decade has been compressed into a period of just two years,” Sundar Pichai, Google’s chief executive, said in an interview with The Times. “A.I. is the accelerant.”

New computer chips for new A.I.

The giant leap forward in computing for A.I. was driven by a tiny ingredient: the specialized computer chips called graphics processing units, or GPUs.

Companies like the Silicon Valley chipmaker Nvidia originally designed these chips to render graphics for video games. But GPUs had a knack for running the math that powers what are known as neural networks, which can learn skills by analyzing large amounts of data. Neural networks are the basis of chatbots and other leading A.I. technologies.

How A.I. Models Are Trained

By analyzing massive datasets, algorithms can learn to distinguish between images, in what’s called machine learning. For example, an A.I. model can be trained to identify an image of a flower by studying existing flower images.

Sources: IBM and Cloudflare

The New York Times

In the past, computing largely relied on chips called central processing units, or CPUs. These could do many things, including the simple math that powers neural networks.

But GPUs can do this math faster — a lot faster. At any given moment, a traditional chip can do a single calculation. In that same moment, a GPU can do thousands. Computer scientists call this parallel processing. And it means neural networks can analyze more data.
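The difference can be sketched with a toy model in Python. This is only an illustration, not a description of any real chip: the function name `steps_needed` and the figure of 4,000 simultaneous calculations are hypothetical, chosen to match "thousands," and the model ignores clock speed, memory and everything else that affects real performance.

```python
import math


def steps_needed(num_calculations: int, lanes: int) -> int:
    """Return how many time steps the work takes when `lanes`
    calculations can run in the same moment (toy model)."""
    if num_calculations < 0 or lanes < 1:
        raise ValueError("need num_calculations >= 0 and lanes >= 1")
    return math.ceil(num_calculations / lanes)


work = 1_000_000  # hypothetical number of simple calculations

# One calculation per moment, as the article describes a traditional chip.
serial_steps = steps_needed(work, lanes=1)

# Thousands per moment; 4,000 is an assumed value for illustration.
parallel_steps = steps_needed(work, lanes=4_000)

speedup = serial_steps / parallel_steps
print(serial_steps, parallel_steps, speedup)
```

In this simplified picture, the same work finishes in far fewer steps, which is why a neural network running on GPUs can get through more data in the same amount of time.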

“These are very different from chips used to just serve up a web page,” said Vipul Ved Prakash, the chief executive of Together AI, a tech consultancy. “They run millions of calculations as a way for machines to ‘think’ about a problem.”

So tech companies started using increasingly large numbers of GPUs to build increasingly powerful A.I. technologies.

Difference between CPU and GPU-powered computers

Sources: Nvidia, IBM and Cloudflare

Along the way, Nvidia rebuilt its GPUs specifically for A.I., packing more transistors into each chip to run even more calculations with each passing second. In 2013, Google began building its own A.I. chips.

These Google and Nvidia chips were not designed to run computer operating systems and could not handle the various functions for operating a Windows laptop or an iPhone. But working together, they accelerated the creation of A.I.

“The old model lasted for about 50 years,” said Norm Jouppi, a Google engineer who oversees the company’s effort to build new silicon chips for A.I. “Now, we have a completely different way of doing things.”

The closer the chips, the better.

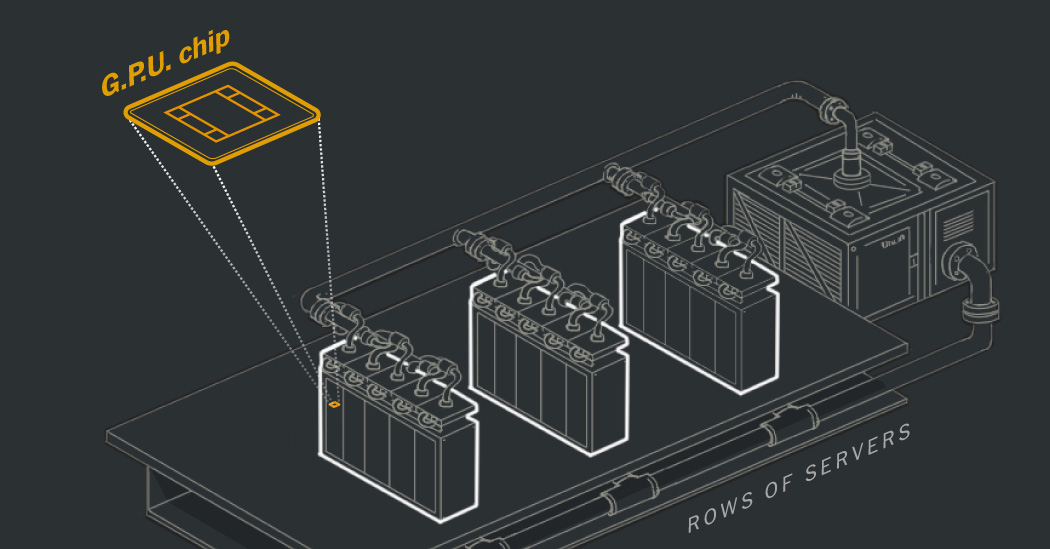

It is not just the chips that are different. To get the most out of GPUs, tech companies must speed the flow of digital data among the chips.

“Every GPU needs to talk to every other GPU as fast as possible,” said Dave Driggers, the chief technology officer at Cirrascale Cloud Services, which operates a data center in Austin, Texas, for the Allen Institute for Artificial Intelligence, a prominent A.I. research lab.

The closer the chips are to one another, the faster they can work. So companies are packing as many chips into a single data center as they can. They have also developed new hardware and cabling to rapidly stream data from chip to chip.

Meta’s Eagle Mountain data center sits in a valley beneath Utah’s Lake Mountains, south of Salt Lake City. Meta broke ground on this building after the A.I. boom erupted.

That is changing how data centers — which are essentially big buildings filled with racks of computers stacked on top of one another — work.

In 2021, before the A.I. boom, Meta opened two data centers an hour south of Salt Lake City and was building three more there. These facilities — each the size of the Empire State Building, laid on its side across the desert — would help power the company’s social media apps, such as Facebook and Instagram.

But after OpenAI released ChatGPT in 2022, Meta re-evaluated its A.I. plans. It had to cram thousands of GPUs into a new data center so they could churn through weeks and even months of calculations needed to build a single neural network and advance the company’s A.I.

“Everything must function as one giant, data-center-sized supercomputer,” said Rachel Peterson, Meta’s vice president of data centers. “That is a whole different equation.”

Within months, Meta broke ground on a sixth and seventh Utah data center beside the other five. In these 700,000-square-foot facilities, technicians filled each rack with hardware used to train A.I., sliding in boxy machines packed with GPUs that can cost tens of thousands of dollars.

In 2023, Meta incurred a $4.2 billion restructuring charge, partly to redesign many of its future data center projects for A.I. Its activity was emblematic of a change happening across the tech industry.

A.I. machines need more electricity. Much more.

New data centers packed with GPUs meant new electricity demands, so large that the industry’s appetite for power would go through the roof.

In December 2023, Cirrascale leased a 139,000-square-foot traditional data center in Austin that drew on 5 megawatts of electricity, enough to power about 3,600 average American homes. Inside, computers were arranged in about 80 rows. Then the company ripped out the old computers to convert the facility for A.I.

The 5 megawatts that used to power a building full of CPUs is now enough to run just eight to 10 rows of computers packed with GPUs. Cirrascale can expand to about 50 megawatts of electricity from the grid, but even that would not fill the data center with GPUs.
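The arithmetic behind those figures can be worked through in a few lines of Python. This is a rough sketch that assumes all 5 megawatts go to the computers and that the old and new rows are comparable in size; neither assumption comes from Cirrascale, and the variable names are illustrative.

```python
FACILITY_POWER_MW = 5.0   # power drawn by the Austin facility
HOMES_POWERED = 3_600     # average American homes that 5 MW can supply
CPU_ROWS = 80             # rows of traditional computers before the conversion
GPU_ROWS_MIN, GPU_ROWS_MAX = 8, 10  # rows the same power supports with GPUs


def kw_per_row(total_mw: float, rows: int) -> float:
    """Average kilowatts available per row (assumes power is split evenly)."""
    return total_mw * 1_000 / rows


cpu_kw_per_row = kw_per_row(FACILITY_POWER_MW, CPU_ROWS)
gpu_kw_per_row_low = kw_per_row(FACILITY_POWER_MW, GPU_ROWS_MAX)
gpu_kw_per_row_high = kw_per_row(FACILITY_POWER_MW, GPU_ROWS_MIN)

# How many times more power a GPU row draws than a CPU row.
ratio_low = gpu_kw_per_row_low / cpu_kw_per_row
ratio_high = gpu_kw_per_row_high / cpu_kw_per_row

# Average draw per home implied by "5 megawatts ... about 3,600 homes".
kw_per_home = FACILITY_POWER_MW * 1_000 / HOMES_POWERED

print(cpu_kw_per_row, gpu_kw_per_row_low, gpu_kw_per_row_high)
print(ratio_low, ratio_high, round(kw_per_home, 2))
```

Under these assumptions, a row of GPU machines draws roughly eight to 10 times the power of a row of traditional computers.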

And that is still on the small side. OpenAI aims to build about five data centers that top the electrical use of about three million households.

Cirrascale’s data center in Austin, Texas, draws on 5 megawatts of electricity, which can power eight to 10 rows of computers packed with GPUs.

It’s not just that these data centers have more gear packed into a tighter space. The computer chips that A.I. revolves around need far more electricity than traditional chips. A typical CPU needs about 250 to 500 watts to run, while GPUs use up to 1,000 watts.

Building a data center is ultimately a negotiation with the local utility. How much power can it provide? At what cost? If it must expand the electrical grid with millions of dollars in new equipment, who pays for the upgrades?

Data centers consumed about 4.4 percent of total electricity in the United States in 2023, or more than twice as much power as the facilities used to mine cryptocurrencies. That could triple by 2028, according to a December report published by the Department of Energy.

Power consumption by A.I. data centers

The Energy Department estimates that A.I.-specialized data centers could consume as much as 326 terawatt-hours by 2028, nearly eight times what they used in 2023.

Source: Lawrence Berkeley National Laboratory, Energy Department

“Time is the currency in the industry right now,” said Arman Shehabi, a researcher at the Lawrence Berkeley National Laboratory who led the report. There is a rush to keep building, he said, and “I don’t see this slowing down in the next few years.”
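The pace implied by the Energy Department's estimate for A.I.-specialized data centers can be approximated with a short calculation. This sketch treats "nearly eight times" as exactly eight and assumes steady compound growth from 2023 to 2028; both are simplifying assumptions, not figures from the report.

```python
PROJECTED_2028_TWH = 326.0  # upper estimate for A.I.-specialized data centers
GROWTH_MULTIPLE = 8.0       # assumption: "nearly eight times" treated as 8
YEARS = 2028 - 2023

# Implied 2023 consumption. Because the real multiple is slightly under 8,
# the actual 2023 figure would be somewhat higher than this.
implied_2023_twh = PROJECTED_2028_TWH / GROWTH_MULTIPLE

# Constant yearly growth rate that compounds to the multiple over the period.
annual_growth = GROWTH_MULTIPLE ** (1 / YEARS) - 1

print(implied_2023_twh, round(annual_growth * 100, 1))
```

On those assumptions, consumption would have to grow by roughly half every year to reach the 2028 estimate.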

Data center operators are now having trouble finding electrical power in the United States. In areas like Northern Virginia — the world’s biggest hub of data centers because of its proximity to underwater cables that shuttle data to and from Europe — these companies have all but exhausted the available electricity.

Some A.I. giants are turning to nuclear power. Microsoft is restarting the Three Mile Island nuclear plant in Pennsylvania.

Others are taking different routes. Elon Musk and xAI, his A.I. start-up, recently bypassed clean energy in favor of a quicker solution: installing their own gas turbines at a new data center in Memphis.

“My conversations have gone from ‘Where can we get some state-of-the-art chips?’ to ‘Where can we get some electrical power?’” said David Katz, a partner with Radical Ventures, a venture capital firm that invests in A.I.

A.I. gets so hot, only water can cool it down.

These unusually dense A.I. systems have led to another change: a different way of cooling computers.

A.I. systems can get very hot. As air circulates from the front of a rack and crosses the chips crunching calculations, it heats up. At Cirrascale’s Austin data center, the temperature around one rack started at 71.2 degrees Fahrenheit on the front and ended up at 96.9 degrees on the back side.
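For readers who think in Celsius, the rack readings can be converted with a short Python sketch. The function and variable names are illustrative; only the two Fahrenheit readings come from the Cirrascale measurement.

```python
def fahrenheit_to_celsius(temp_f: float) -> float:
    """Convert a temperature reading from Fahrenheit to Celsius."""
    return (temp_f - 32) * 5 / 9


FRONT_F = 71.2  # air temperature at the front of the rack
BACK_F = 96.9   # air temperature at the back of the rack

front_c = fahrenheit_to_celsius(FRONT_F)
back_c = fahrenheit_to_celsius(BACK_F)

# A temperature difference converts with the 5/9 scale factor only;
# the 32-degree offset cancels out.
rise_f = BACK_F - FRONT_F
rise_c = rise_f * 5 / 9

print(round(front_c, 1), round(back_c, 1), round(rise_f, 1), round(rise_c, 1))
```

The air warms by about 25.7 degrees Fahrenheit, or about 14.3 degrees Celsius, as it passes through a single rack.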

If a rack isn’t properly cooled down, the machines — and potentially the whole data center — are at risk of catching fire.

Just outside Pryor, a farm-and-cattle town in the northeast corner of Oklahoma, Google is solving this problem on a massive scale.

Thirteen Google data centers rise up from the grassy flatlands. This campus holds tens of thousands of racks of machines and uses hundreds of megawatts of electricity streaming from metal-and-wire power stations installed between the concrete buildings. To keep the machines from overheating, Google pumps cold water through all 13 buildings.

In the past, Google’s water pipes ran through empty aisles beside the racks of computers. As the cold water moved through the pipes, it absorbed the heat from the surrounding air. But when the racks are packed with A.I. chips, the water isn’t close enough to absorb the extra heat.

Source: SimScale thermodynamics

Google now runs its water pipes right up next to the chips. Only then can the water absorb the heat and keep the chips working.

Pumping water through a data center filled with electrical equipment can be risky since water can leak from the pipes onto the computer hardware. So Google treats its water with chemicals that make it less likely to conduct electricity — and less likely to damage the chips.

Once the water absorbs the heat from all those chips, tech companies must also find ways of cooling the water back down.

In many cases, they do this using giant towers sitting on the roof of the data center. Some of the water evaporates from these towers, which cools the rest of it, much as people are cooled when they sweat and the sweat evaporates from their skin.

“That is what we call free cooling — the evaporation that happens naturally on a cool, dry morning,” said Joe Kava, Google’s vice president of data centers.

Inside a Google data center, which is packed with computers that use Google’s A.I. chips.

Google and other companies that use this technique must keep replenishing the water that pumps through the data center, which can strain local water supplies.

Google data centers consumed 6.1 billion gallons of water in 2023, up 17 percent from the previous year. In California, a state that faces drought, more than 250 data centers consume billions of gallons of water annually, raising alarm bells among local officials.

Some companies, including Cirrascale, use massive chillers — essentially air-conditioners — to cool their water instead. That reduces pressure on the local water supply, because they reuse virtually all of the water. But the process requires more electrical power.

There is little end in sight. Last year, Google broke ground on 11 data centers in South Carolina, Indiana, Missouri and elsewhere. Meta said its newest facility, in Richland Parish, La., would be big enough to cover most of Central Park, Midtown Manhattan, Greenwich Village and the Lower East Side.

“This will be a defining year for AI,” Mark Zuckerberg, Meta’s chief executive, said in January in a Facebook post that concluded, “Let’s go build!”