“I felt like I was alone,” Ruma, 31, told This Week in Asia. “I had to collect the evidence myself and even identify the culprit … I felt like I was doing the work of the police.”

The suspect, a former student of the college surnamed Park, had targeted 61 women, distributing 1,852 explicit AI-generated images via the Telegram messaging app. Park and his accomplice, known as Kang, described themselves as “photo composition experts”.

In September, Park and Kang were sentenced to 10 years and four years in prison respectively. They later appealed, and on Friday an appeals court reduced their sentences to nine years for Park and three years and six months for Kang, taking into account that they had reached a settlement with some of the victims.

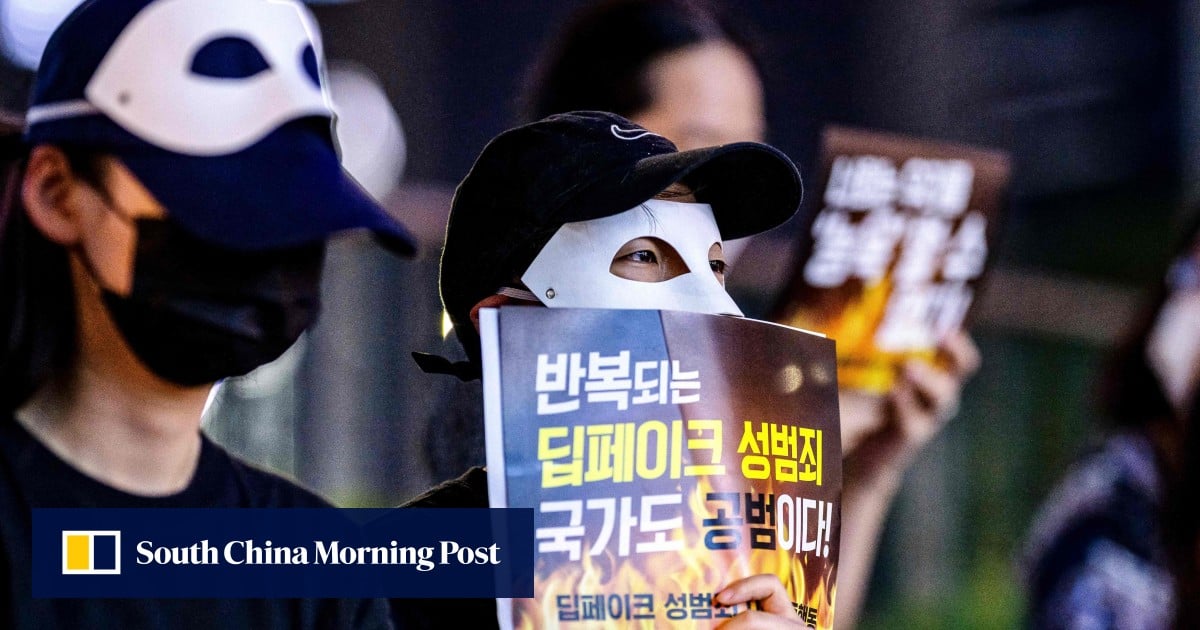

Ruma’s case is emblematic of a rise in digital sex crimes in South Korea. More than 18,000 such cases were documented in 2024, an increase of 12.7% over the previous year, according to the country’s Ministry of Gender Equality and Family.

The explosion of deepfake technology used in these crimes is particularly alarming, with such cases increasing by 227% last year alone. Deepfakes use AI to imitate a person’s face, voice or actions, with victims often inserted into fabricated pornographic content.