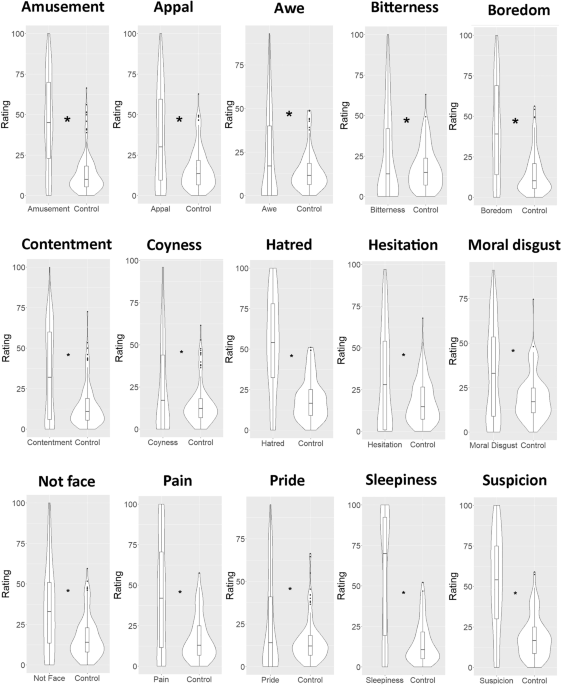

In summary, the results showed that the android Nikola could produce complex facial expressions of emotion. Seventeen emotion expressions were rated higher on the target emotion than on non-target emotions by at least one sample (German or Japanese), and thirteen of these were correctly identified by both samples. Post-hoc analyses further suggest that twelve of these seventeen emotions were among the highest-rated emotions in at least one sample. Combined with research on facial expressions of the six basic emotions<sup>10</sup>, these results indicate that Nikola is able to produce a variety of recognizable expressions. An android capable of replicating recognizable human facial expressions may have a wide range of applications in social robotics that focus on emotional interaction, such as customer service, caregiving, or nursing. Because Nikola’s expressions are not perceived as stranger than a human’s expressions<sup>22,23</sup>, Nikola already addresses a common limitation of human-like robots, namely the uncanny valley effect, which can undermine trust and affective interaction<sup>24,25</sup>.

Four of the expressions were rated higher on the target emotion than on non-target emotions by only one sample: bitterness and pride in the Japanese sample, and confusion and relief in the German sample. The expression of emotions is culturally filtered, for example in its intensity<sup>26,27,28</sup>, which may lead to differences in how emotions are expressed and, therefore, in how expressions of emotion are evaluated. Additionally, the effect sizes for these four expressions tend to be relatively small compared with those of the other expressions, suggesting that they may be more difficult to recognize in general.

Even when the target emotions differed significantly from the top-ranked emotions, they were nevertheless conceptually similar: for example, bitterness was rated as contempt, moral disgust as hatred or contempt, and relief as amusement. These results suggest that even when Nikola’s expression is not optimally recognized, its valence is nonetheless communicated. Because the recognition of emotions may depend on other factors, such as the social situation in which they are expressed<sup>29</sup>, conveying the correct valence together with an appropriate social scenario could facilitate correct emotion recognition in a social context.

Five of Nikola’s facial expressions were not recognized relative to non-target emotions by either sample. For these expressions, the highest-rated non-target emotions also tended to be conceptually different from the target emotions (e.g., embarrassment was rated as amusement, flirtation as suspicion, pride as contentment, contempt as confusion, and shame as bitterness), further indicating that these emotions were not attributed correctly. There may be several reasons for this. First, Nikola’s hardware may be limited for specific facial movements. For example, the affectionate face includes a kissing mouth that requires a high intensity of AU 18 (lip pucker), which Nikola’s hardware may be unable to reproduce. Similarly, the expression of contempt involves looking down on the interaction partner by raising the head, which may be constrained by Nikola’s limited ability to lower his gaze sharply. Some movements may also have been misinterpreted or may have obscured parts of Nikola’s face. Second, the correct identification of emotional expressions also depends on social or multimodal context<sup>29</sup>, which was not present in this study. For example, the expression of embarrassment uses an appeasing smile<sup>30</sup>, which may not have been recognized as such (but rather as an ordinary smile) without social context. Likewise, multimodal information (e.g., saying “ouch” with a pained face) can further improve the ability to identify an expression correctly. Third, the selected AUs may have been inadequate for expressing some emotions: for confusion, only one study was found<sup>19</sup>, which may have provided insufficient information about the AUs needed to express it. However, these explanations are largely speculative and require further research.

Our results also have implications for future research; for example, Nikola could be a valuable tool in face-processing research. Research on face perception is often limited by a lack of stimulus variety and by the difficulty of controlling certain stimulus variables (e.g., the speed or sequence of AU movements). Nikola provides an embodied agent whose facial expression variables, such as AU sequence, can be easily controlled, enabling the creation of facial expression stimuli that would be difficult to produce with human expressers (e.g., asynchronous facial AU movements for studying configural processing<sup>31</sup>). Nikola may thus facilitate the investigation of new research questions.
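To illustrate what controlling AU timing can look like in practice, the sketch below describes a stimulus as a set of AU events, each with an onset, rise, hold, and fall phase, and samples their intensities frame by frame. This is a hypothetical representation written for illustration only; it is not Nikola’s control interface, and the names `AUEvent`, `intensity`, and `timeline`, the piecewise-linear envelope, and the 0 to 1 intensity scale are all assumptions. Delaying the onset of one AU relative to another (here AU 6, cheek raiser, relative to AU 12, lip corner puller) produces the kind of asynchronous stimulus that is hard to elicit reliably from human expressers.

```python
from dataclasses import dataclass


@dataclass
class AUEvent:
    """Hypothetical description of one action unit's movement in a stimulus."""
    au: int        # FACS action unit number, e.g. 6 = cheek raiser, 12 = lip corner puller
    onset: float   # seconds from stimulus start until the AU begins to move
    rise: float    # seconds to reach peak intensity
    hold: float    # seconds held at peak intensity
    fall: float    # seconds to return to neutral
    peak: float    # peak intensity on an assumed 0..1 scale


def intensity(ev: AUEvent, t: float) -> float:
    """Piecewise-linear onset/apex/offset envelope of one AU at time t (seconds)."""
    if t < ev.onset:
        return 0.0
    t -= ev.onset
    if t < ev.rise:                      # rising phase
        return ev.peak * t / ev.rise
    t -= ev.rise
    if t < ev.hold:                      # apex
        return ev.peak
    t -= ev.hold
    if t < ev.fall:                      # falling phase
        return ev.peak * (1 - t / ev.fall)
    return 0.0                           # back to neutral


def timeline(events: list[AUEvent], duration: float, fps: int = 30) -> list[dict[int, float]]:
    """Sample every AU's intensity at each frame; returns one {au: intensity} dict per frame."""
    n_frames = int(round(duration * fps)) + 1
    return [{ev.au: round(intensity(ev, i / fps), 3) for ev in events} for i in range(n_frames)]


if __name__ == "__main__":
    # Asynchronous smile: AU 6 starts 0.5 s after AU 12 (timings are illustrative only).
    smile = [
        AUEvent(au=12, onset=0.0, rise=0.5, hold=1.0, fall=0.5, peak=1.0),
        AUEvent(au=6, onset=0.5, rise=0.5, hold=1.0, fall=0.5, peak=0.8),
    ]
    for i, frame in enumerate(timeline(smile, duration=3.0, fps=10)):
        if i % 5 == 0:                   # print every 0.5 s
            print(f"t={i / 10:.1f}s", frame)
```

Changing only the `onset` of one event while keeping everything else fixed is what makes such a specification useful for controlled comparisons between synchronous and asynchronous versions of the same expression.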

One limitation of the study is the isolated, controlled presentation of emotional expressions. The videos lacked social and multimodal context, which could further improve the identification of emotion expressions. Further research could provide social context (e.g., by simulating a social environment in which the respective emotional reaction would be appropriate) or additional multimodal information (e.g., an “ouch” sound accompanying an expression of pain).

Another limitation lies in the use of rating scales to assess emotion recognition. In this study, recognition was evaluated with emotion labels rated in relative proportions. Unlike forced-choice methods such as choosing from an array of labels, which have been criticized for restricting participants to a single response<sup>14</sup> (a restriction that becomes particularly problematic as the number of expressions or labels increases), this method does not limit participants to one response option. However, it still limits participants’ options to the labels presented. Although an expression may be rated higher on its target emotion than on non-target emotions, this does not mean that participants would correctly identify the emotion without verbal cues. A future study could measure recognized emotions with other methods, such as choosing from an array or free labeling (albeit with a more restricted selection of emotion expressions).

Furthermore, comparing target-emotion ratings with the mean of non-target ratings may be biased when the expression is highly dissimilar to many non-target emotions. A significant difference may then arise from the low ratings of these dissimilar non-target emotions rather than from a high rating of the target emotion. Post-hoc analyses of emotion rankings therefore provide additional information on whether a target emotion differed significantly from the mean because it was among the highest-rated emotions.
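The bias described above can be made concrete with a small worked example. The sketch computes, for one expression, the difference between the target rating and the mean of the non-target ratings, and the target’s rank among all labels. The function name `target_vs_nontargets` and the ratings are hypothetical and chosen only for illustration; they are not data from this study, and the study’s actual statistical tests are not reproduced here.

```python
from statistics import mean


def target_vs_nontargets(ratings: dict[str, float], target: str) -> tuple[float, int]:
    """Return (target rating minus mean non-target rating, rank of the target).

    Rank 1 means no other label was rated strictly higher than the target.
    """
    nontarget = [v for label, v in ratings.items() if label != target]
    diff = ratings[target] - mean(nontarget)
    rank = 1 + sum(v > ratings[target] for v in nontarget)
    return diff, rank


if __name__ == "__main__":
    # Hypothetical mean ratings for an expression intended to show relief.
    ratings = {
        "relief": 3.0,
        "amusement": 4.5,
        "happiness": 4.0,
        "anger": 1.0,
        "fear": 1.0,
        "disgust": 1.0,
        "sadness": 1.0,
    }
    diff, rank = target_vs_nontargets(ratings, "relief")
    print(f"target - mean(non-targets) = {diff:.2f}, rank = {rank}")
```

Here the target exceeds the non-target mean only because four dissimilar labels pull that mean down, while two related labels are still rated higher than the target. Looking at the rank alongside the mean difference separates these two situations.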

Correct identification of emotion expressions was measured here with verbal labels commonly used in facial emotion rating tasks<sup>10,14,16</sup>. Such findings could be supported by indirect measures, such as physiological or behavioral responses to facial expressions of emotion. However, research on physiological and behavioral responses to complex emotional expressions is currently too sparse to provide responses validated specifically for complex expressions. Future research could present Nikola’s expressions alongside those of a human to determine whether the android’s expressions elicit similar responses.