When ChatGPT first arrived, we got a text-based chatbot that could try to answer any question reasonably, even if it was sometimes wrong (that's still the case, since hallucinations haven't gone away). It didn't take long for AI to gain new abilities. It could see things through photos and videos. It could hear humans speak and reply with its own voice.

The next step was giving AI eyes and ears that could observe your surroundings in real time. We already have smart glasses that do this, like the Ray-Ban Meta models. Google and others are working on similar products. Apple might put cameras inside AirPods for the same reason.

The job will be complete when AI has a body, so it can be physically present around us and help us with all sorts of tasks that require manipulating real objects. I saw the writing on the wall months ago, when I said I wanted a humanoid robot for the home.

More recently, I saw the kind of AI model that would give robots the intelligence to see and understand the physical world around them, and to interact with objects and perform actions they were never trained on. It was Figure's Helix Vision-Language-Action (VLA) model for AI robots.

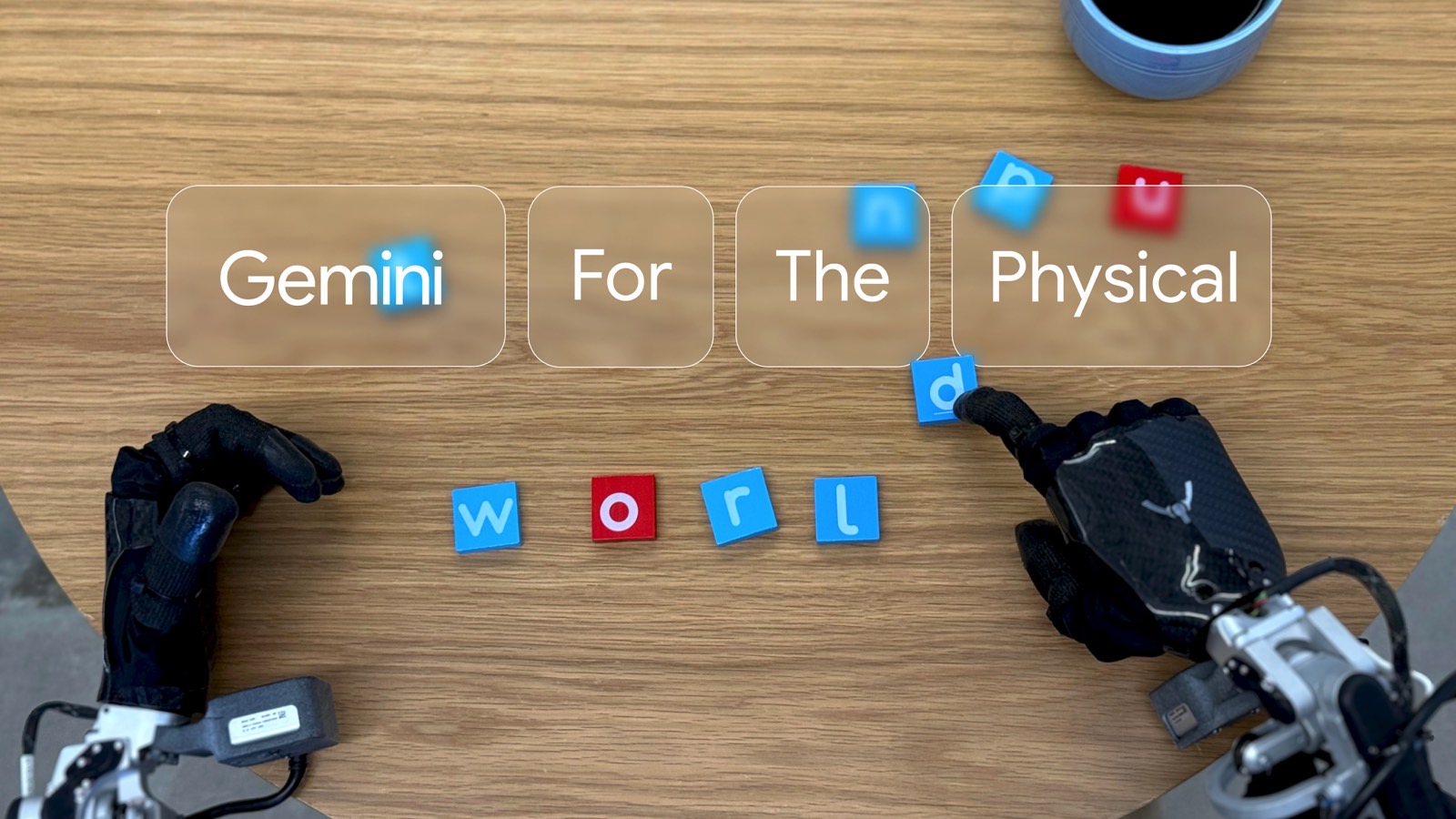

Unsurprisingly, others are working on similar technology, and Google has just announced two Gemini Robotics models that blew me away. Like Figure's tech, Gemini Robotics will help robots understand human commands, their surroundings, and what they need to do to perform the tasks humans give them.

We're still in the early days of robotic AI, and it will be a while before the humanoid robot assistant I want in my home is ready for mass consumption. But Google is already laying the groundwork for that future.

Google DeepMind published a blog post and a research paper describing the new Gemini Robotics and Gemini Robotics-ER models, which it built on top of its Gemini 2.0 tech. Gemini 2.0 is Google's most advanced generative AI model available to users right now.

Gemini Robotics is the VLA model built on Gemini 2.0 "with the addition of physical actions as a new output modality for the purpose of directly controlling robots."

The second is "a Gemini model with advanced spatial understanding, enabling roboticists to run their own programs using Gemini's embodied reasoning (ER) abilities." That's Gemini Robotics-ER.

By embodied reasoning, Google means that robots must develop the "humanlike ability to comprehend and react to the world around us," and do so safely.

Google has shared various videos showing AI robots in action, responding to natural language commands and adapting to changes in their surroundings. Thanks to Gemini, the robots can see their environment and understand natural language. They can then perform new tasks even if they have never interacted with the objects or places involved.

Google explains the three principles that guided the development of Gemini Robotics: generality, interactivity, and dexterity.

"To be useful and helpful to people, AI models for robotics need three principal qualities: they have to be general, meaning they're able to adapt to different situations; they have to be interactive, meaning they can understand and respond quickly to instructions or changes in their environment; and they have to be dexterous, meaning they can do the kinds of things people generally can do with their hands and fingers, like carefully manipulate objects."

As you'll see in the videos in this article, the robots can recognize all sorts of objects on a table and perform tasks in real time. For example, one robot slam-dunks a tiny basketball through a hoop when told to.

AI robots can also adapt quickly to changing surroundings. When told to put bananas into a basket of a specific color on a table, the robots carry out the task correctly, even when a human keeps annoyingly moving that basket around.

Finally, the AI robots can display fine motor skills, such as folding origami or packing a ziplock bag.

Google says the Gemini Robotics model works with all kinds of robots, whether it's a bi-arm robotic platform or a humanoid model.

Gemini Robotics-ER is an equally impressive AI technology for robotics. This model focuses on understanding the world, so robots can plan movements and tasks within the space where they are supposed to act. With Gemini Robotics-ER, AI robots would use Gemini 2.0 to write code on the fly:

"Gemini Robotics-ER improves Gemini 2.0's existing abilities like pointing and 3D detection by a large margin. Combining spatial reasoning and Gemini's coding abilities, Gemini Robotics-ER can instantiate entirely new capabilities on the fly. For example, when shown a coffee mug, the model can intuit an appropriate two-finger grasp for picking it up by the handle and a safe trajectory for approaching it."

All of this is very exciting, at least for this AI enthusiast, even though I know I have a lot of waiting to do before AI robots powered by this kind of technology are commercially available.

Before you start worrying that AI robots will become the enemy, as in the movies, you should know that Google also developed a Robot Constitution in earlier work to ensure AI robots behave safely in their environment and avoid harming humans. This safety constitution is inspired by Isaac Asimov's Three Laws of Robotics, which Google built on to create a new framework that can be further adjusted through simple natural language instructions:

"We have since developed a framework to automatically generate data-driven constitutions (rules expressed directly in natural language) to steer a robot's behavior. This framework would allow people to create, modify and apply constitutions to develop robots that are safer and more aligned with human values."

You can find out more about the Gemini Robotics models at this link.