Deepfakes simulate a person's likeness from an image, a video or a few seconds of audio.

COLUMBUS, Ohio – If you’ve spent time on social media lately, you’ve probably seen a lot of dolls and action figures: your family and friends turned into cartoon dolls in packaging, complete with their favorite accessories. It’s the latest social media trend powered by artificial intelligence.

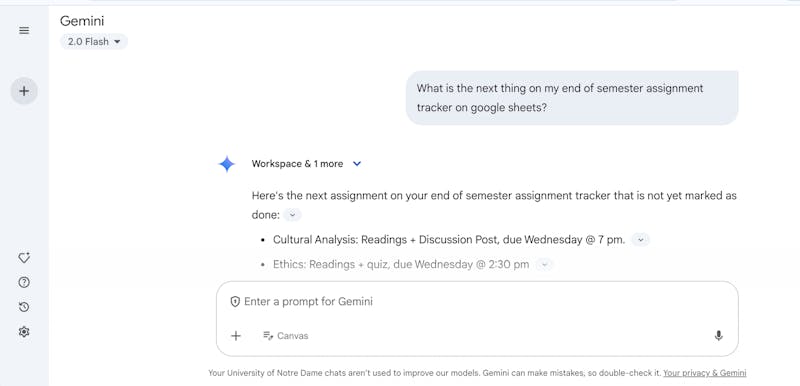

The trend involves using generative AI tools like ChatGPT to create dolls or action figures of yourself, complete with accessories. Although it’s a fun way to share your personality with friends, cybersecurity experts warn that it raises privacy concerns.

“It’s fun, but when you upload a high-quality image to a company that helps you create these images or dolls, you’re giving it an irrevocable license to use your image,” said Rishabh Das, assistant professor of emerging communication technologies at Ohio University in Athens.

“You never know when that company might be sold to a third party, and then that license somehow gets transferred,” he said.

Das has kept an eye on developments in artificial intelligence and how it can be used to streamline workflows, improve communication and make the internet safer, but he has also seen how criminals use it to do harm.

Deepfakes have emerged as the technology to mimic someone’s likeness has improved. They can simulate a person from a single image, a video or a few seconds of audio.

“Just a few seconds of video, a few images or a clip of your voice are more than enough for a criminal to replicate you in a deepfake,” Das said.

He said social media is also where much of this information can be harvested.

“We like to post. We like to share our life experiences with our friends, and unfortunately, criminals use that to learn more about us,” he said.

As things stand, there is no federal law protecting your data from scammers or addressing what happens if that information is turned into a deepfake without your knowledge or consent.

According to the Better Business Bureau of Central Ohio, deepfake scams bring back the old “Grandma, help!” scheme, but this time the scammer uses a copy of a loved one’s voice to be more convincing.

“It’s the same tactic: ‘I need money for bail, I’m in jail, I have to pay a lawyer,’” said Lee Anne Lanigan, director of investigations at the BBB of Central Ohio. “You can always hang up. You can always call someone. There is no reason to be polite with these people. Hang up. Call your person. I promise you that your person will pick up and say they’re fine.”

She said some families have set up code words or phrases to prove who they really are if someone on the other end of the phone claims to be family and starts asking for something they never would have asked for before.

Consumer Reports, which has examined the use of generative AI and deepfake technology, noted that it is nearly impossible to erase your digital footprint online.

However, the easiest ways to protect yourself and your family from deepfake scams are to know they exist, enable two-factor authentication on all financial accounts and simply do a gut check. If something seems off, it probably is.