You’ve probably heard that Perplexity (a company whose web scraping tactics I generally despise, and the only AI bot we still block at MacStories) has rolled out an iOS version of its voice assistant that integrates with several native features of the operating system. Here’s their promo video in case you missed it:

It’s a very clever idea: while the voice modes of the other major LLMs are limited to having a conversation with the chatbot (with the kind of quality and conversational flow that, frankly, destroys Siri), Perplexity put a different spin on it: they used native APIs and Apple frameworks to make conversations more actionable (some may even say “agentic”) and integrated with the Apple apps you use every day. I’ve seen a lot of people call Perplexity’s voice assistant “what Siri should be” or argue that Apple should consider Perplexity as an acquisition target because of this, and I thought I’d share some additional comments and notes after playing with their voice mode for a while.

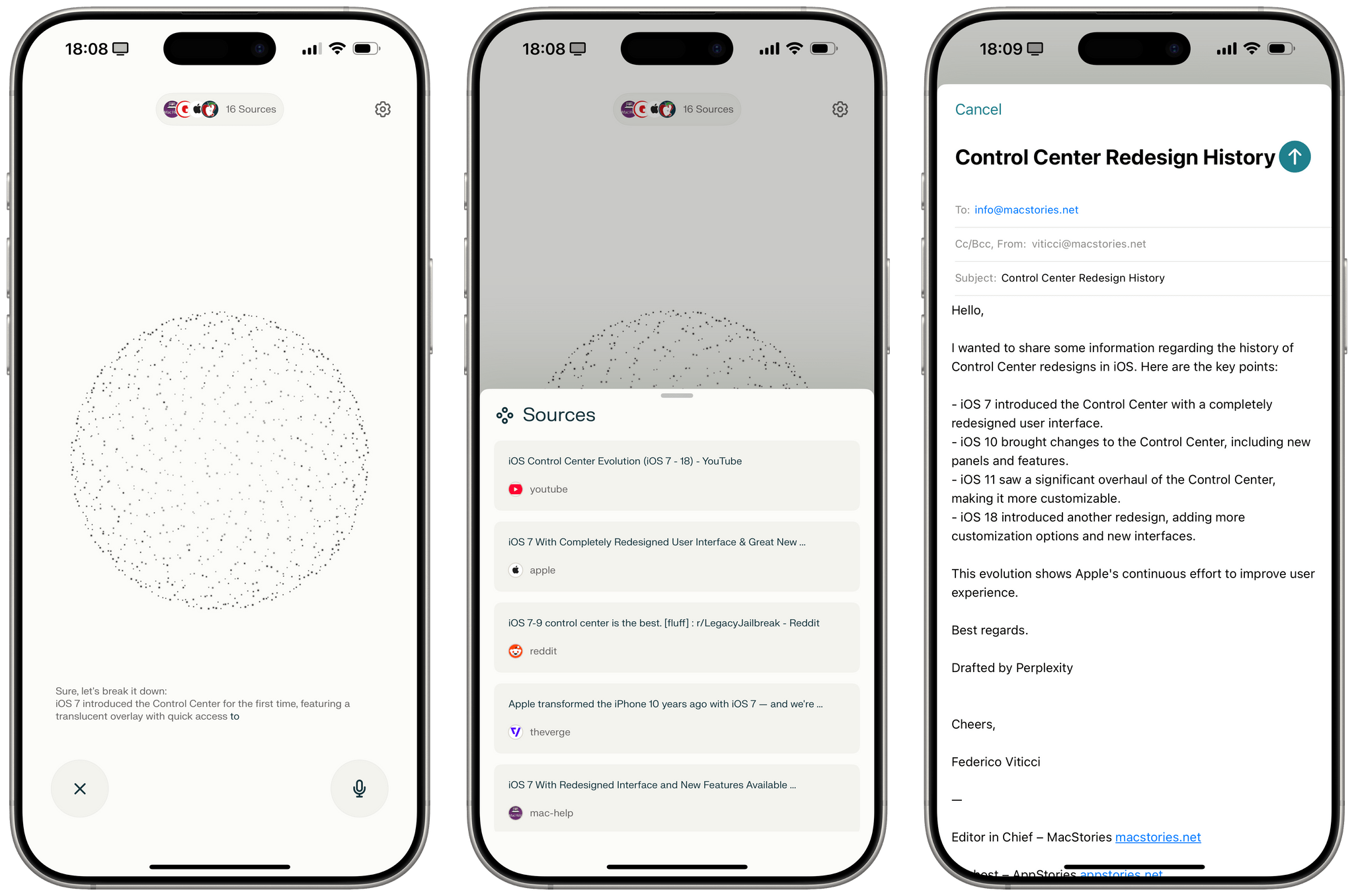

The most important point about this feature is that, in hindsight, it is so obvious, and I’m surprised that OpenAI still hasn’t shipped the same feature for their incredibly popular ChatGPT voice mode. Perplexity’s iOS voice assistant isn’t using any “secret” tricks or hidden APIs: they’re simply integrating with existing frameworks and APIs that any third-party iOS developer can already work with. They’re leveraging EventKit for reminder and calendar event retrieval and creation; they’re using MapKit to load inline snippets of Apple Maps locations; they’re using Mail’s native compose sheet and Safari View Controller to let users send pre-filled emails or browse webpages manually; they’re integrating with MusicKit to play songs from Apple Music, provided that you have the Music app installed and an active subscription. Theoretically, there is nothing stopping Perplexity from rolling out support for additional frameworks such as ShazamKit, Image Playground, WeatherKit, the clipboard, or even photo library access in their voice assistant. Perplexity hasn’t found a “loophole” to replicate Siri functionalities; they were just the first major AI company to do so.
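To give a sense of how ordinary these APIs are, here is a minimal Swift sketch of creating a reminder with EventKit. This is not Perplexity’s code: the `addReminder` function and its parameters are hypothetical names for illustration, and the sketch assumes iOS 17 or later (for `requestFullAccessToReminders()`) and an app that declares the `NSRemindersFullAccessUsageDescription` key in its Info.plist.

```swift
import EventKit

// Hypothetical helper for illustration only; not taken from any shipping app.
// Creates a reminder with a due date, optionally in a named Reminders list.
func addReminder(title: String, due: Date, listName: String? = nil) async throws {
    let store = EKEventStore()

    // Ask the user for permission (iOS 17+ API). Bail out quietly if denied.
    guard try await store.requestFullAccessToReminders() else { return }

    let reminder = EKReminder(eventStore: store)
    reminder.title = title

    // Use the list whose title matches `listName`, if there is one;
    // otherwise fall back to the user's default list for new reminders.
    let lists = store.calendars(for: .reminder)
    reminder.calendar = lists.first { $0.title == listName }
        ?? store.defaultCalendarForNewReminders()

    // Due dates on reminders are expressed as date components, not a Date.
    reminder.dueDateComponents = Calendar.current.dateComponents(
        [.year, .month, .day, .hour, .minute], from: due)

    try store.save(reminder, commit: true)
}
```

A voice assistant would presumably call something like this after the model has extracted a title, a date, and a list name from the conversation; how Perplexity actually wires this up is not something the app exposes.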

However, this doesn’t make their integration any less impressive, because it perfectly illustrates why people no longer have the patience to deal with Siri’s limitations. In a post-LLM world, and especially now that LLMs have voice modes, we expect to be able to have a long conversation with an assistant that fully understands natural language, can keep the entire context of that conversation, and can mix and match tools such as web search, user context, and (in Perplexity’s case) apps to fulfill our requests. From this perspective, Perplexity’s voice mode absolutely puts Siri to shame (even the version with ChatGPT integration).

To give you two examples, I asked the following questions to both Siri and Perplexity:

Can you play the song that was playing in The O.C. when Seth Cohen wore a Spider-Man mask and was hanging upside down?

Can you tell me how many times the iOS Control Center has received a major redesign over the years?

Siri was downright useless, despite having ChatGPT integration enabled. For the Control Center request, it returned some unrelated Google Search results, one of them being a link to the Alexa app on the App Store (???). For the music request, it started playing a song called “Seth and Summer Forever” by Babygirl.

Perplexity, on the other hand, nailed both requests. For the music request, I repeated the question, but added “do this on YouTube because I don’t have an Apple Music subscription” and, sure enough, it loaded a video of that scene in an embedded YouTube player. For the iOS history request, we went back and forth to confirm all the major Control Center redesigns over the years, which Perplexity answered correctly, and when I asked it at the end to create a reminder for me to check all this information, it did exactly that.

You see, we’re not talking about random generative slop here: we’re dealing with practical questions that, at this point in 2025, I would expect any modern AI assistant to answer reliably and quickly using a variety of tools at its disposal. You may disagree with the principles of how these technologies were created in the first place, but I think it’s undeniable that Siri produces absolute garbage while Perplexity is actually helpful.

That being said, there’s only so much Perplexity’s voice assistant can do given the platform’s limitations; on top of that, their existing integrations also need more work.

For starters, their voice assistant often fails to set due dates on reminders for today (weird), and it can’t save reminders into specific lists of the Reminders app. I know these features are possible to implement because there’s a plethora of third-party Reminders clients that make them work, so the issues are all in Perplexity’s implementation. As I mentioned above, there are also several other iOS frameworks that Perplexity decided not to support in the first version of this product: technically, Perplexity could also integrate with HomeKit to control home accessories (one of the few things I use Siri for these days), but their assistant doesn’t support that functionality. Then there are all the integrations that are exclusive to Siri, which Perplexity can’t implement because Apple doesn’t offer a related developer API. Only Siri can run shortcuts, set timers, call App Intents, send messages, create notes, open and change device settings, and more. If you ask me, these are all prime candidates for a certain government body to force Apple to open up in the name of fair competition. But, hey: I’m biased.

Looking at the big picture for a second, I think Apple is in a precarious situation here. The fact that the company makes the best computers for AI is a double-edged sword: it’s great for consumers, but those same consumers are increasingly using Apple devices as mere conduits for other companies, funneling their data, context, and (most importantly) habits into systems that Apple cannot control. If hundreds of millions of people get used to having really productive conversations with ChatGPT’s voice mode on a daily basis, what happens when OpenAI flips the switch on native iOS integrations in the voice assistant of their iPhone app, if Apple doesn’t enter this space for another year?

Following Perplexity’s clever approach, OpenAI could do the funniest thing here. While Siri gets a helping hand from ChatGPT (often with bad results), OpenAI may decide to let the ChatGPT app integrate with the rest of iOS. I’m convinced it will happen, and I hope Siri’s new leadership has a plan in place for this possibility.